How to manage crawl budget for large sites

The Internet is an ever-evolving virtual universe with over 1.1 billion websites.

Do you think Google can crawl every website in the world?

Even with all the resources, money, and data centers Google has, it can’t — or doesn’t want to — crawl the entire web.

What is crawl budget, and is it important?

Crawl budget refers to the amount of time and resources Googlebot spends crawling web pages in a domain.

Optimizing your crawl budget is important so that Google can find and index your content faster, which can help your site get better visibility and traffic.

If you have a large site with millions of web pages, it is especially important to manage your crawl budget so Google crawls your most important pages and gets a better understanding of your content.

Google says:

If your site does not have a large number of pages that change rapidly, or if your pages seem to be crawled the same day they are published, keeping your sitemap up to date and checking your index coverage regularly is enough. Google also states that after each page is crawled, it must be reviewed, consolidated, and assessed to determine where it will be indexed.

Crawl budget is determined by two main elements: the crawl capacity limit and crawl demand.

Crawl demand is how much Google wants to crawl your website. More popular pages (for example, a popular story from CNN) and pages that change significantly will be crawled more.

Googlebot wants to crawl your site without overwhelming your servers. To prevent this, Googlebot calculates a crawl capacity limit: the maximum number of simultaneous parallel connections Googlebot can use to crawl a site, as well as the time delay between fetches.

Taking crawl capacity and crawl demand together, Google defines a site's crawl budget as the set of URLs that Googlebot can and wants to crawl. Even if the crawl capacity limit is not reached, Googlebot will crawl your site less if crawl demand is low.
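Google does not publish how Googlebot enforces its crawl capacity limit, but the two knobs described above (concurrent connections and a delay between fetches) can be illustrated with a toy asyncio crawler. This is a sketch under stated assumptions, not Googlebot's implementation: a semaphore caps concurrent fetches, and each connection slot pauses after a fetch before it is reused. The `fetch` callable, `max_connections`, and `delay_seconds` are hypothetical names chosen for the example.

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")

async def crawl_with_capacity_limit(
    urls: List[str],
    fetch: Callable[[str], Awaitable[T]],
    max_connections: int = 2,
    delay_seconds: float = 1.0,
) -> List[T]:
    # Toy model of a crawl capacity limit (hypothetical, not Googlebot's code):
    # at most `max_connections` fetches run at once, and each connection slot
    # waits `delay_seconds` after a fetch before it can be reused.
    slots = asyncio.Semaphore(max_connections)

    async def crawl_one(url: str) -> T:
        async with slots:
            result = await fetch(url)
            await asyncio.sleep(delay_seconds)
            return result

    # gather() returns results in the same order as `urls`.
    return await asyncio.gather(*(crawl_one(u) for u in urls))
```

Lowering `max_connections` or raising `delay_seconds` reduces load on the server at the cost of a slower crawl, which is the trade-off a crawl capacity limit balances.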

Here are the top 12 tips for managing crawl budget for large to medium-sized sites with 10,000 to millions of URLs.

1. Determine which pages are important and which should not be crawled

Determine which pages are important and which pages are not that important to crawl (and that Google will therefore visit less frequently).

Once you have determined through analysis which pages of your site are worth crawling and which are not, you can block the latter from being crawled.

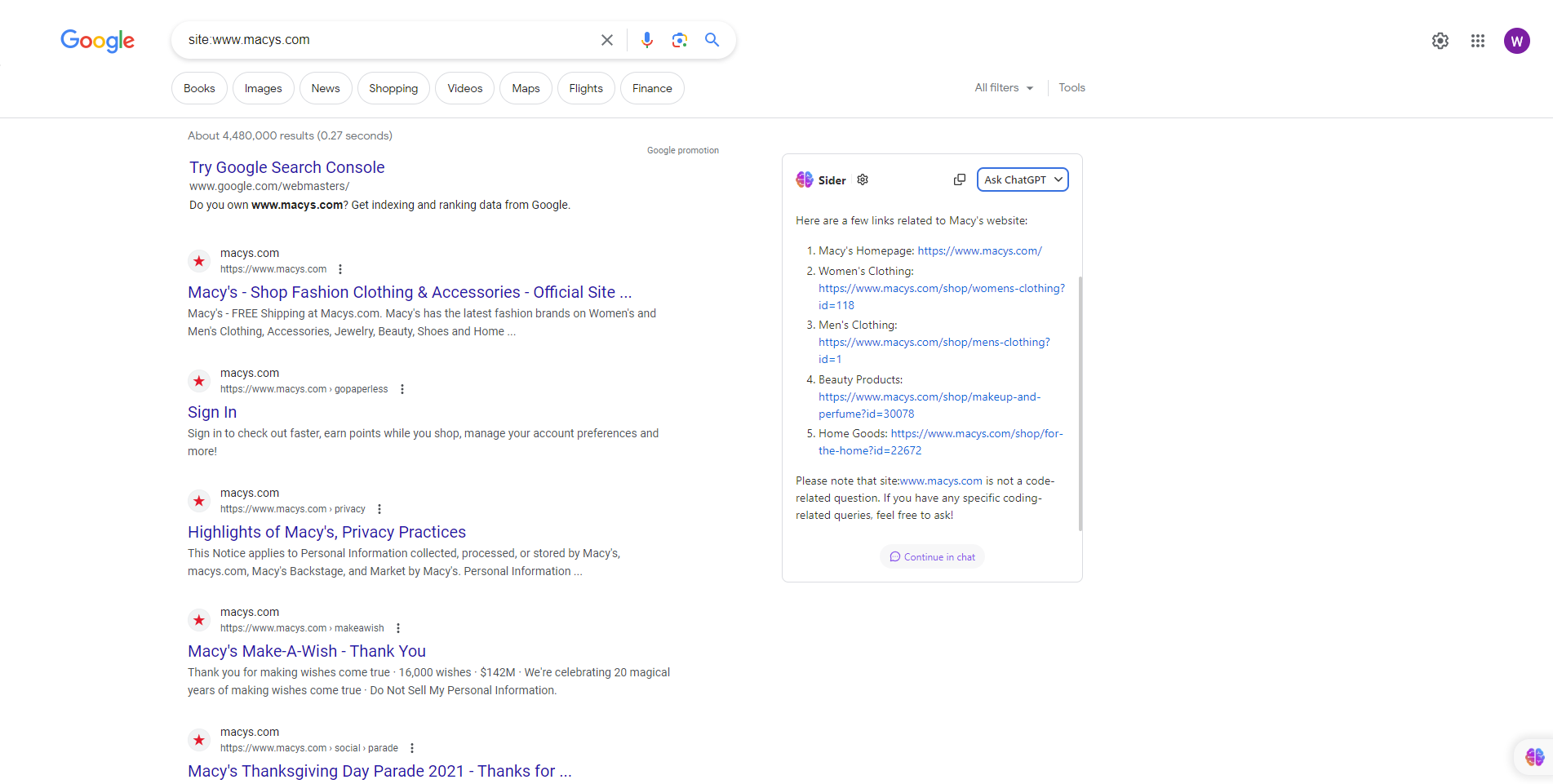

For example, Macys.com has over 2 million pages indexed.

Screenshot from search for [site: macys.com], Google, June 2023

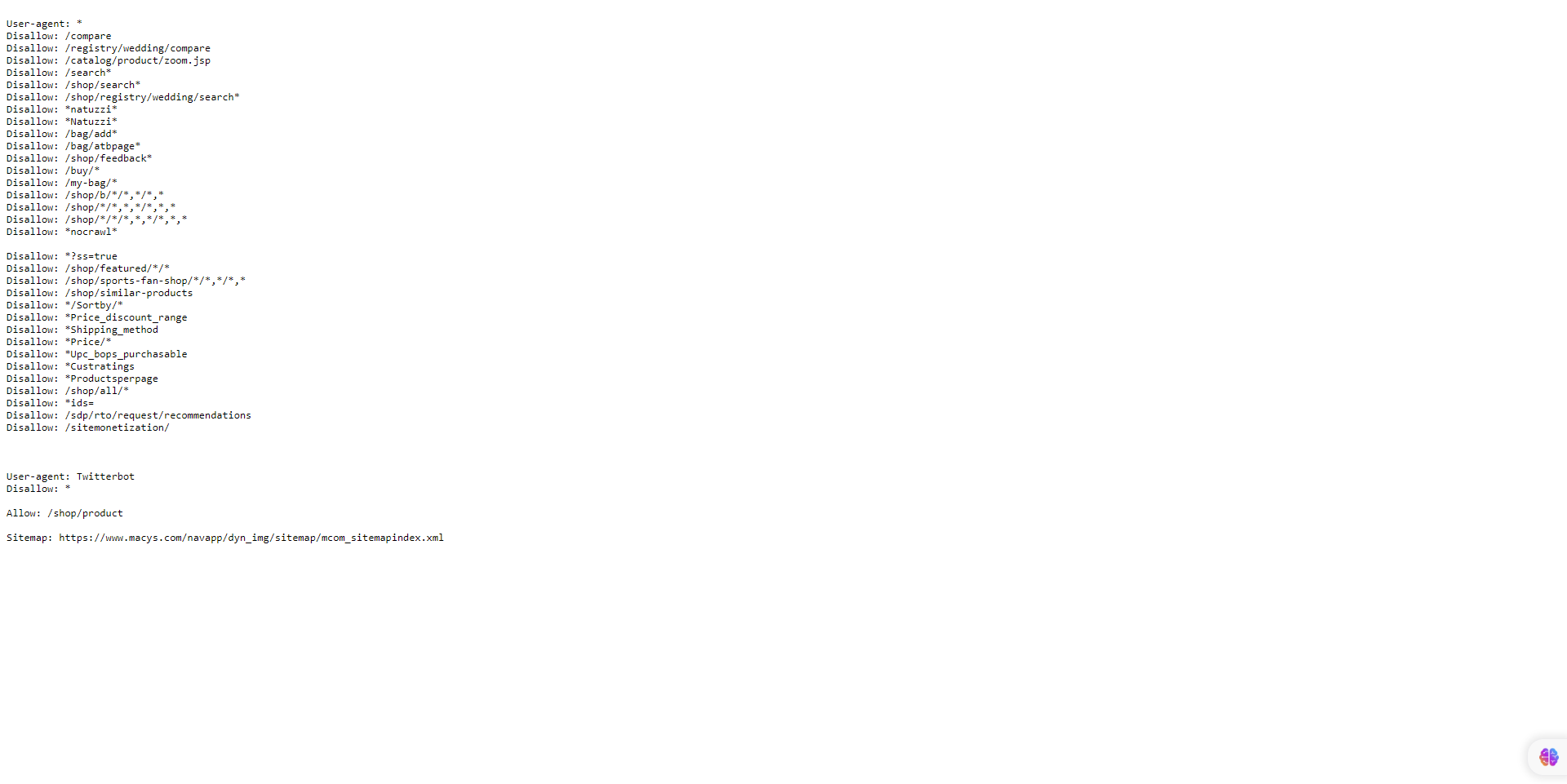

You can help manage crawl budget by telling Googlebot not to crawl certain pages on your site, for example by blocking those URLs in your robots.txt file.

If too many low-value URLs are crawlable, Googlebot may decide it is not worth the time to look at the rest of your site or to increase your crawl budget. Make sure faceted navigation and session identifiers are blocked via robots.txt.
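Google's robots.txt rules support `*` (any sequence of characters) and a trailing `$` (end of URL), and when several rules match a URL, the rule with the longest path wins, with Allow winning a tie. The sketch below applies those matching rules to a hypothetical robots.txt that blocks faceted-navigation filters, session identifiers, and internal search while still allowing one directory. The parameter names (`color`, `sessionid`) and paths are made up for illustration, and the parser ignores user-agent groups and percent-encoding, so treat it as a way to reason about rules rather than a full robots.txt implementation.

```python
import re

# Hypothetical robots.txt for an e-commerce site; paths and parameters are illustrative.
ROBOTS_TXT = """\
User-agent: *
Disallow: /*?*color=
Disallow: /*?*sessionid=
Disallow: /search
Allow: /search/guides/
"""

def parse_rules(robots_txt):
    """Collect (directive, pattern) pairs. Simplification: ignores user-agent groups."""
    rules = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field = field.strip().lower()
        if field in ("allow", "disallow"):
            rules.append((field, value.strip()))
    return rules

def _to_regex(pattern):
    """'*' matches any run of characters; a trailing '$' anchors the end of the URL."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = "".join(".*" if ch == "*" else re.escape(ch) for ch in pattern)
    return re.compile(body + ("$" if anchored else ""))

def is_allowed(path, rules):
    """The longest matching pattern wins; on a tie, Allow wins. No match means allowed."""
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow blocks nothing
        if _to_regex(pattern).match(path):  # match() anchors at the start of the path
            is_allow = directive == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed
```

With these rules, `/search/guides/winter` stays crawlable because the longer `Allow: /search/guides/` rule beats `Disallow: /search`, while `/products/shoes?color=red` is blocked.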

2. Manage duplicate content

While Google doesn't penalize duplicate content, you want to provide Googlebot with original and unique information that satisfies the end user's information needs and is helpful and relevant. Make sure you are using the robots.txt file to keep crawlers away from duplicate URLs.

Google states that you should not use noindex for this purpose, because Googlebot will still request the page and only then drop it.
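To find duplicate URLs worth blocking or consolidating, one approach is to normalize each crawled URL and group URLs that collapse to the same form. The sketch below lowercases the scheme and host, drops the fragment, treats a trailing slash as insignificant, removes `utm_*` tracking parameters plus a hypothetical set of parameters (`sessionid`, `sort`) assumed not to change page content, and sorts the rest. Which parameters are safe to ignore is site-specific, so `IGNORED_PARAMS` is an assumption you would replace with your own list.

```python
from collections import defaultdict
from typing import Dict, List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sessionid", "sort"}

def normalize_url(url: str) -> str:
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith("utm_")
    ]
    query.sort()  # parameter order does not change the page
    path = parts.path.rstrip("/") or "/"  # assumption: /shoes and /shoes/ serve the same page
    # The fragment (#...) is never sent to the server, so it is dropped.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))

def group_duplicates(urls: List[str]) -> Dict[str, List[str]]:
    groups: Dict[str, List[str]] = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    # Keep only normalized forms shared by two or more crawled URLs.
    return {key: members for key, members in groups.items() if len(members) > 1}
```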

3. Block crawling of unimportant URLs using robots.txt and tell Google which pages it can crawl

For an enterprise-level site with millions of pages, Google recommends blocking the crawling of unimportant URLs using robots.txt.

Also, you want to make sure that your important pages, directories containing your golden content, and money pages are allowed to be crawled by Googlebot and other search engines.

Screenshot from author, June 2023

4. Long redirect chains

If you can, keep the number of redirects small. Too many redirects or redirect loops can confuse Google and reduce your crawl limit.

Google notes that long redirect chains can have a negative effect on crawling.
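A quick way to find long chains and loops is to follow each redirect one hop at a time and record every URL along the way. In this sketch, `fetch` is a hypothetical callable that returns the status code and `Location` header for a URL without following redirects (you would back it with an HTTP client configured not to follow redirects), and `max_hops=10` is an arbitrary cap chosen for the example.

```python
from typing import Callable, List, Optional, Tuple
from urllib.parse import urljoin

# Hypothetical: fetch(url) -> (status_code, Location header or None), WITHOUT following redirects.
Fetch = Callable[[str], Tuple[int, Optional[str]]]
REDIRECT_CODES = {301, 302, 303, 307, 308}

def trace_redirects(url: str, fetch: Fetch, max_hops: int = 10) -> Tuple[List[str], str]:
    """Return the list of URLs visited and a verdict: 'final', 'loop', or 'too many hops'."""
    chain, seen = [url], {url}
    for _ in range(max_hops):
        status, location = fetch(url)
        if status not in REDIRECT_CODES or not location:
            return chain, "final"
        url = urljoin(url, location)  # Location may be relative
        chain.append(url)
        if url in seen:
            return chain, "loop"
        seen.add(url)
    return chain, "too many hops"
```

The number of hops is `len(chain) - 1`; any result with more than one hop is a candidate for pointing internal links (and the first redirect) straight at the final URL.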

5. Use HTML

Using HTML increases the odds that a crawler from any search engine can crawl your website.

While Googlebot has improved at crawling and indexing JavaScript, other search engine crawlers are not as sophisticated as Google's and may have issues with content that relies on languages other than HTML.
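One way to see what a crawler that does not execute JavaScript can discover is to parse the raw HTML your server returns and list the links in it. The sketch below uses Python's standard `html.parser`; links that only appear after a script runs are not in the raw response, so they are not found. The sample page used with it is hypothetical.

```python
from html.parser import HTMLParser
from typing import List

class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags in the HTML exactly as served (no JavaScript runs)."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

def links_in_raw_html(html: str) -> List[str]:
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

If an important link shows up in the browser but not in this list, it is being added by JavaScript and may be missed by less capable crawlers.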

6. Make sure your web pages load quickly and offer a good user experience

Optimize your site for Core Web Vitals.

The faster your content loads (i.e., in under three seconds), the faster Google can deliver information to end users. If users like it, Google will keep indexing your content because your site demonstrates good crawl health, which can increase your crawl limit.

7. Have useful content

According to Google, content is rated by quality, regardless of age. Create and update your content as necessary, but there is no additional value in making pages artificially appear fresh by making trivial changes and updating the page date.

It doesn’t matter if your content is old or new, as long as it satisfies the needs of the end users, i.e. is helpful and relevant.

If users don't find your content helpful and relevant, I suggest updating and refreshing it to make it fresh, relevant, and useful, and promoting it via social media.

Also, link to your important pages directly from the homepage, so they may be seen as more important and crawled more often.

8. Watch out for crawl errors

If you delete some pages on your site, make sure the URLs of permanently removed pages return a 404 or 410 status. A 404 status code is a strong signal not to crawl that URL again.

Blocked URLs, however, stay part of your crawl queue much longer and will be recrawled when the block is removed.

- Also, Google states that you should eliminate soft 404 pages, which will continue to be crawled and waste your crawl budget. To check for them, log into GSC and review your Index Coverage report for soft 404 errors.
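The checks above can be scripted for a list of URLs you have removed. In this sketch, `fetch` is a hypothetical callable returning the status code and body for a URL, and `SOFT_404_HINTS` is an assumed, site-specific list of phrases that suggest a not-found page served with a 200 status. Google's own soft 404 detection is not public, so this is only a rough heuristic.

```python
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical: fetch(url) -> (status_code, body_text).
Fetch = Callable[[str], Tuple[int, str]]

GONE_CODES = {404, 410}
# Assumed phrases that suggest a "not found" page served with a 200 status.
SOFT_404_HINTS = ("page not found", "no longer available", "0 results found")

def audit_removed_urls(urls: Iterable[str], fetch: Fetch) -> Dict[str, str]:
    """Map each removed URL that does not return 404/410 to a short description of the problem."""
    problems: Dict[str, str] = {}
    for url in urls:
        status, body = fetch(url)
        if status in GONE_CODES:
            continue  # correctly signals that the page is gone
        if status == 200 and any(hint in body.lower() for hint in SOFT_404_HINTS):
            problems[url] = "possible soft 404 (200 status with a not-found page)"
        else:
            problems[url] = f"returned {status}, expected 404 or 410"
    return problems
```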

Also, if your site has many 5xx HTTP response status codes (server errors) or connection timeouts, crawling will slow down. Google recommends paying attention to the Crawl Stats report in Search Console and keeping the number of server errors to a minimum.

By the way, Google does not support the non-standard “crawl-delay” robots.txt rule.

Even if you use the nofollow attribute, the linked page can still be crawled and waste crawl budget if another page on your site, or any page on the web, links to it without nofollow.

9. Update sitemaps

XML sitemaps are important to help Google find your content and can speed things up.

Update your sitemap URLs whenever your content is updated, and:

- Only include URLs that you want to be indexed by search engines.

- Only include URLs that return a 200 status code.

- Make sure a single sitemap file is smaller than 50MB (uncompressed) or 50,000 URLs, and if you decide to use multiple sitemaps, create a sitemap index file that lists all of them.

- Make sure your sitemap is UTF-8 encoded.

- Include links to the localized version(s) of each URL. (See Google's documentation.)

- Keep your sitemap up to date, i.e., update it every time there is a new URL or an old URL is updated or deleted.
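The size and encoding guidelines above can be enforced when generating sitemaps. This sketch splits a URL list into files of at most 50,000 URLs, XML-escapes each URL, encodes the output as UTF-8, checks the 50MB uncompressed limit, and writes a sitemap index only when more than one file is needed. The file names (`sitemap-1.xml`, `sitemap-index.xml`) and `base_url` are hypothetical, and it assumes the input already contains only indexable URLs that return a 200 status code.

```python
from typing import Dict, List
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000
MAX_BYTES_PER_SITEMAP = 50 * 1024 * 1024  # 50MB, uncompressed
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XML_DECL = '<?xml version="1.0" encoding="UTF-8"?>\n'

def build_urlset(urls: List[str]) -> bytes:
    # escape() turns &, <, > into entities, which URLs inside XML require.
    entries = "".join(f"  <url><loc>{escape(u)}</loc></url>\n" for u in urls)
    data = (XML_DECL + f'<urlset xmlns="{SITEMAP_NS}">\n{entries}</urlset>\n').encode("utf-8")
    if len(data) > MAX_BYTES_PER_SITEMAP:
        raise ValueError("sitemap exceeds 50MB uncompressed; use a smaller max_urls")
    return data

def build_sitemaps(urls: List[str], base_url: str, max_urls: int = MAX_URLS_PER_SITEMAP) -> Dict[str, bytes]:
    files: Dict[str, bytes] = {}
    for start in range(0, len(urls), max_urls):
        name = f"sitemap-{start // max_urls + 1}.xml"  # hypothetical naming scheme
        files[name] = build_urlset(urls[start:start + max_urls])
    if len(files) > 1:
        # The sitemap index lists every sitemap file, so only the index needs submitting.
        entries = "".join(
            f"  <sitemap><loc>{escape(base_url + name)}</loc></sitemap>\n" for name in files
        )
        index = XML_DECL + f'<sitemapindex xmlns="{SITEMAP_NS}">\n{entries}</sitemapindex>\n'
        files["sitemap-index.xml"] = index.encode("utf-8")
    return files
```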

10. Build a good site structure

Having a good site structure is important for your SEO performance, indexing, and user experience.

Site structure can affect search engine results page (SERP) performance in a number of ways, including crawlability, click-through rate, and user experience.

Having a clear and straightforward site structure can make the most of your crawl budget, helping Googlebot find any new or updated content.

Always remember the three-click rule: any user should be able to get from any page of your site to any other in a maximum of three clicks.
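Click depth can be measured with a breadth-first search over your internal link graph. The sketch below approximates the three-click rule by measuring depth from the homepage only (checking every page against every other page would mean running the same search from each page). The `links` dictionary, mapping each page to the pages it links to, is a hypothetical input you would build from a crawl of your own site.

```python
from collections import deque
from typing import Dict, List, Tuple

def click_depths(links: Dict[str, List[str]], start: str) -> Dict[str, int]:
    """Breadth-first search: the first time a page is reached is its minimum click depth."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def check_three_click_rule(
    links: Dict[str, List[str]], home: str = "/", max_clicks: int = 3
) -> Tuple[List[str], List[str]]:
    """Return (pages deeper than max_clicks, pages not reachable from home at all)."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(page for page, depth in depths.items() if depth > max_clicks)
    unreachable = sorted(all_pages - set(depths))
    return too_deep, unreachable
```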

11. Internal linking

The easier you make it for search engines to crawl and navigate your site, the easier it is for crawlers to identify your structure, context, and important content.

Having internal links point to a web page can tell Google that the page is important, help establish an information hierarchy for your website, and help spread link equity throughout your site.
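Counting inbound internal links per page is a simple way to spot pages that receive little internal linking. Pages you know exist (for example, from your sitemap) with no inbound internal links at all are orphans that crawlers can only discover through the sitemap or external links. The `links` graph and `known_pages` list in this sketch are hypothetical inputs; each linking page is counted once per target, and self-links are ignored.

```python
from collections import Counter
from typing import Dict, Iterable, List

def inbound_link_counts(links: Dict[str, List[str]]) -> Counter:
    counts: Counter = Counter()
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once per target
            if target != source:     # ignore self-links
                counts[target] += 1
    return counts

def orphan_pages(links: Dict[str, List[str]], known_pages: Iterable[str]) -> List[str]:
    counts = inbound_link_counts(links)
    # Counter returns 0 for pages that never appear as a link target.
    return sorted(page for page in known_pages if counts[page] == 0)
```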

12. Always monitor crawl stats

Always review and monitor GSC to see whether your site has any crawling issues, and look for ways to make your crawling more efficient.

You can use the Crawl Stats report to see whether Googlebot has any issues crawling your site.

If GSC reports availability errors or warnings for your site, look in the host availability graphs for instances where Googlebot requests exceeded the red limit line, click into the graph to see which URLs were failing, and try to correlate those with issues on your site.

Also, you can use the URL Inspection tool to test a few URLs on your site.

If the URL Inspection tool returns host load warnings, that means Googlebot can't crawl as many URLs from your site as it discovered.
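Your own server logs can complement the Crawl Stats report. This sketch parses access-log lines in the combined log format used by default in many Apache and Nginx setups, keeps requests whose user agent contains `Googlebot`, and counts responses by status class along with the URLs returning 5xx errors. The log format is an assumption about your server setup, and because the user-agent string can be spoofed, a real audit should also verify that requests come from Google (for example, with a reverse DNS lookup).

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Assumed combined log format: host ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_summary(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    by_class: Counter = Counter()       # e.g. {"2xx": 120, "5xx": 3}
    server_errors: Counter = Counter()  # path -> number of 5xx responses
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # unparseable line, or not a (claimed) Googlebot request
        status = int(match["status"])
        by_class[f"{status // 100}xx"] += 1
        if status >= 500:
            server_errors[match["path"]] += 1
    return by_class, server_errors
```

A rising share of `5xx` responses, or the same few paths dominating `server_errors`, points at the server problems that make Googlebot slow its crawling.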

Wrap up

Optimizing crawl budget is crucial for large sites due to their size and complexity.

With numerous pages and dynamic content, search engine crawlers face challenges in crawling and indexing the site's content efficiently and effectively.

By optimizing your crawl budget, site owners can prioritize the crawling and indexing of important and updated pages, ensuring that search engines spend their resources wisely and efficiently.

This optimization process involves techniques such as improving site architecture, managing URL parameters, setting crawl priorities, and eliminating duplicate content, leading to better search engine visibility, improved user experience, and increased organic traffic for large websites.


Featured Image: BestForBest/shutterstock